Using AI on board small sats - OSCW 18

This year for OSCW we measured the power consumption of two edge computing devices (the TX1 and the Movidius) to find out whether they could potentially be embedded on a Cubesat. What's the future of AI for Cubesats?

Last year I attended the first edition of the OSCW at ESOC (Darmstadt, Germany) and I met a lot of interesting people and projects (you can read what I wrote about it here). It was great, but I didn't bring a project or a talk, so this year I wanted to put in my two cents.

This was my first time contributing to such an event and my first poster. It would not have been possible without the help of Dr. Redouane Boumghar, an open source enthusiast who happens to have a PhD in robotics and to work for ESA. Not too bad!

Red and I are interested in applying AI to solve all kinds of challenges in the space industry. As an embedded systems engineer, I am especially interested in using it on board small satellites, so I proposed that we collaborate on this project.

Motivations

There is a new trend in the electronics industry: edge computing. Basically, after all these years promoting the benefits of the Internet of Things, we've finally accepted that having millions of dumb connected devices lying around may not be very clever. Instead, manufacturers are now trying to create more powerful and efficient devices that can do most of their job without relying on third parties. Incidentally, this push to decentralize products is not only a hardware thing: we are seeing a similar trend in web services.

I really sympathize with this idea because of its advantages in terms of latency, security and privacy, but what does this have to do with space? Well, being able to run computationally intensive algorithms at low power also opens a wide range of opportunities for small satellites, in particular the one we're most interested in: the availability of AI on board.


The main reason we started this project, and why we think this new capability is important, is that the number of small sats and constellations is growing rapidly. It's the new space era, and as the number of objects sent into orbit grows, we need to find ways to increase their degree of autonomy. Otherwise, constellations will become very limited and difficult to manage.

We already knew that these new tools existed. Edge computing is a reality (check out this repo where we're compiling a list of them), but we realized it was difficult to compare them on paper. The second reason for this research is that we needed a more empirical approach to find out which of these devices are really suited to go to space.

Last but not least, we wanted to show how proprietary hardware behaves, so that anyone building open source computing hardware has key performance indicators to compare against.

The comparison

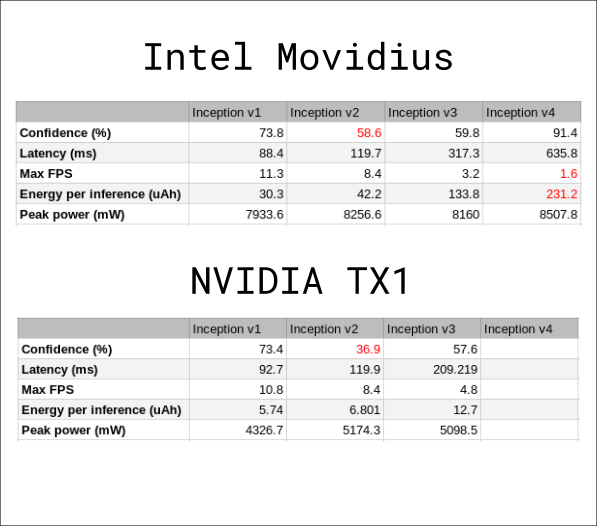

We wanted to know how feasible it would be to embed some of these devices on a satellite. To do so, we picked two of the most widespread ones: the NVIDIA Jetson TX1 and the Intel Movidius. Then we ran the same algorithms on both platforms and measured several key parameters.

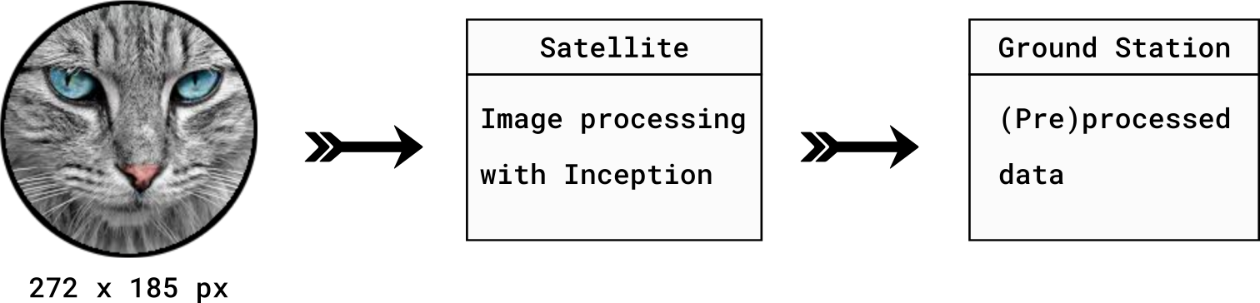

The chosen algorithm was Inception, one of the most widely used deep-learning-based image classifiers. There are 4 different versions of Inception, but for our goal, all we need to know is that each version adds more layers and complexity than the previous one. We should therefore expect higher power consumption and longer latency from Inception v4.

A similar algorithm could be trained on the ground and embedded on board to segment and classify satellite imagery. We decided to use it because implementations were available for both platforms, which allowed us to focus on the measurements.

Before sharing the results, we want to point out that the goal of this research was not to obtain the most accurate possible power consumption measurements. Still, the figures should be accurate enough to give an idea of what can and cannot be done on a Cubesat.

Now, let's see the results. The complete data can be downloaded from the following link: https://github.com/crespum/oscw18-edge-ai/.

First of all, we collected some values that indicate how the neural network is performing: the confidence of the most likely output, the latency (i.e. the time it takes to process an image) and the maximum FPS. We admit that calling the latter parameter Frames Per Second is a bit misleading, as the term is typically used for video and that's not the problem Inception tries to solve. In our case, by FPS we mean how many inferences can be run per second or, in other words, how many images can be classified per second.

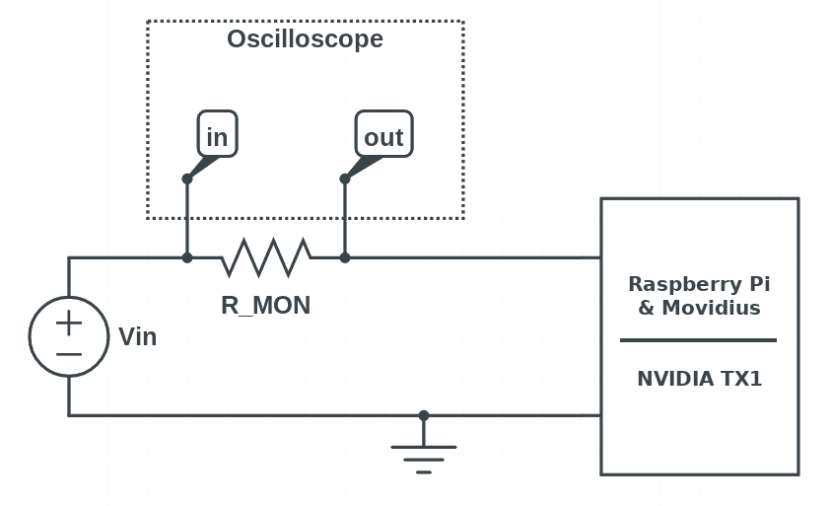
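Latency and maximum FPS are two views of the same measurement. The following is a minimal sketch of how both could be measured, assuming inferences run back to back with no pipelining. `benchmark` and `infer` are hypothetical names (`infer` stands in for whatever call runs one inference on the TX1 or the Movidius); this is not taken from the project repository.

```python
import time
from statistics import mean
from typing import Any, Callable, Sequence, Tuple


def benchmark(infer: Callable[[Any], Any], images: Sequence[Any]) -> Tuple[float, float]:
    """Run `infer` on each image, one after another.

    Returns (mean latency in seconds, maximum FPS). `infer` is a hypothetical
    stand-in for the platform-specific call and must block until it finishes.
    """
    if len(images) == 0:
        raise ValueError("need at least one image")
    latencies = []
    for img in images:
        t0 = time.perf_counter()
        infer(img)  # one full inference, i.e. one image classified
        latencies.append(time.perf_counter() - t0)
    avg = mean(latencies)
    if avg <= 0:
        raise RuntimeError("timer resolution too coarse for this workload")
    # Back-to-back inferences, no pipelining: max FPS is simply 1 / latency.
    return avg, 1.0 / avg
```

For example, a mean latency of 0.1 s means at most 10 images classified per second. A device that overlaps several inferences could exceed 1 / latency, so this relation is only an approximation.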

We also calculated two power-related values: the energy it takes to run inference on a single image and the peak power consumption. To obtain the former, we subtracted the static current consumption (i.e. the current drawn by the device before running our script). The table below shows these numbers. We have highlighted some values in red because we think they are meaningful; we explain why in the next section.

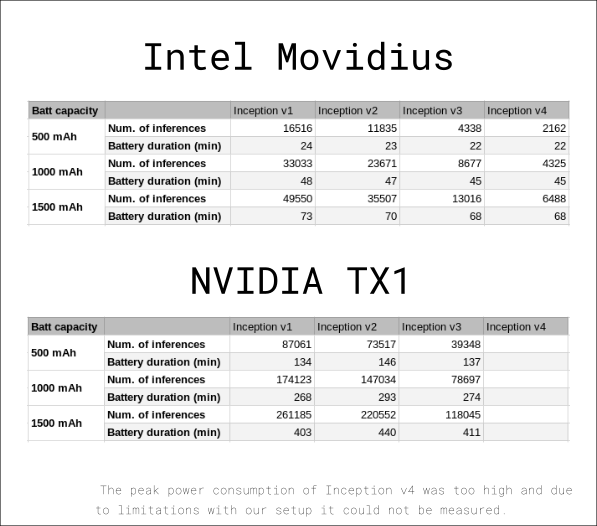
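The subtraction of the static current can be sketched as follows, assuming a constant supply voltage and a current trace sampled at a fixed interval (for instance, exported from an oscilloscope). The function names and parameters are hypothetical, and the integration uses the trapezoidal rule; the real data is in the repository linked above.

```python
from statistics import mean
from typing import Sequence


def static_current(idle_samples_a: Sequence[float]) -> float:
    """Baseline current (A) drawn before the inference script starts."""
    return mean(idle_samples_a)


def energy_per_inference(current_a: Sequence[float], dt_s: float, supply_v: float,
                         static_a: float, n_inferences: int = 1) -> float:
    """Dynamic energy (J) per inference from a uniformly sampled current trace.

    Assumes a constant supply voltage and that the trace spans exactly
    `n_inferences` runs. Subtracting the static current keeps only the extra
    energy spent on inference.
    """
    if len(current_a) < 2 or dt_s <= 0 or n_inferences < 1:
        raise ValueError("need >= 2 samples, dt_s > 0 and n_inferences >= 1")
    dynamic = [i - static_a for i in current_a]
    # Trapezoidal rule for the integral of (I - I_static) dt, in coulombs.
    charge_c = dt_s * (sum(dynamic) - 0.5 * (dynamic[0] + dynamic[-1]))
    return supply_v * charge_c / n_inferences
```

With only 3 significant figures per exported sample, small differences between the measured and the static current get quantized, which is one reason these figures are approximate.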

The next two tables contain some battery benchmarks. We chose some reasonable battery capacities and calculated how long those batteries would last.

Notice that we could not take valid measurements for Inception v4 on the TX1. The reason is that our power supply couldn't deliver enough current at the peak power point. As a result, the board shut down before the inference finished.

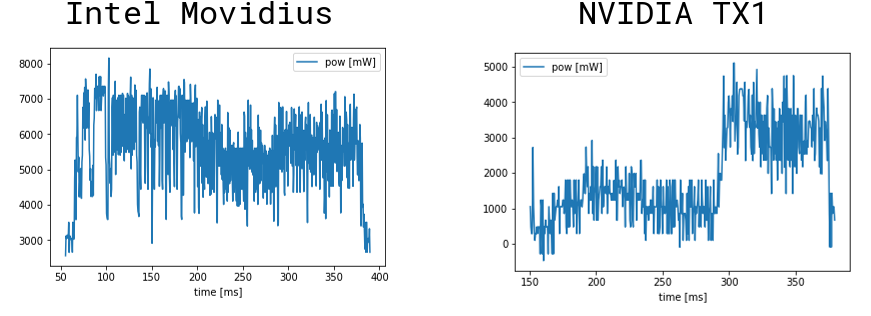
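As a rough sketch of how such battery figures can be derived, assuming an ideal battery (constant nominal voltage, no converter losses) and hypothetical function names:

```python
import math

SECONDS_PER_HOUR = 3600


def battery_energy_j(capacity_mah: float, nominal_v: float) -> float:
    """Energy stored in an ideal battery, in joules (mAh -> Ah -> J)."""
    return capacity_mah / 1000 * nominal_v * SECONDS_PER_HOUR


def runtime_hours(capacity_mah: float, nominal_v: float, avg_power_w: float) -> float:
    """How long the battery lasts at a constant average power draw."""
    return battery_energy_j(capacity_mah, nominal_v) / avg_power_w / SECONDS_PER_HOUR


def images_per_charge(capacity_mah: float, nominal_v: float,
                      energy_per_image_j: float) -> int:
    """How many inferences fit in one full charge, counting only inference energy."""
    return math.floor(battery_energy_j(capacity_mah, nominal_v) / energy_per_image_j)
```

To estimate battery life at max FPS, one could plug in an average power equal to the static power plus the energy per image times the FPS; real batteries and DC-DC converters will do somewhat worse than this ideal model.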

Finally, in the project repository you will also find graphs inside the different Jupyter Notebooks that show how power consumption changes over time. Below you can find an example of Inception v3 power consumption.

Conclusions

After going through all the data, we want to highlight the following points:

- With the NVIDIA TX1 we can run inference on up to 80k images with only 500 mAh of charge. With the same charge, the Movidius can process just 16k images.

- Neither of them is suited to video processing: even with a really small image (272 x 185 px), the highest FPS we could achieve was ~11. Nevertheless, as we said before, this benchmark doesn't add much value, because Inception is not designed to classify video frames.

- Battery life (at max FPS) is almost constant across all versions of Inception.

- It's also curious that Inception v2 yields pretty bad results.
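To put the first point in perspective, the image counts can be turned into an approximate charge per image. Since 500 mAh is a charge, converting it into energy would also require the battery voltage, which we leave out here:

$$
Q = 500\,\mathrm{mAh} = 0.5\,\mathrm{A} \times 3600\,\mathrm{s} = 1800\,\mathrm{C}
$$

$$
q_{\mathrm{TX1}} \approx \frac{1800\,\mathrm{C}}{80\,000} = 22.5\,\mathrm{mC\ per\ image},
\qquad
q_{\mathrm{Movidius}} \approx \frac{1800\,\mathrm{C}}{16\,000} = 112.5\,\mathrm{mC\ per\ image}
$$

In other words, under these figures the Movidius needs roughly five times as much charge per classified image as the TX1.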

To sum up, there is a clear winner: the TX1. Its latency is lower, and so are its peak power consumption and the energy it consumes. However, the price is also worth mentioning, as the TX1 costs three times as much as the Movidius.

How can we do better?

At first we tried to develop our own algorithm to segment clouds in satellite images. This was of course very interesting, but it was not an easy task: not only did we have to create the algorithm, we also had to adapt it to each platform. It took us some time to admit that this was not the main goal of the project and that there were faster ways to achieve our goals.

As for obtaining accurate power consumption figures, some engineers are using wattmeters costing over 1000€ to measure how many pJ each mathematical operation of a deep learning network takes. If we could configure the oscilloscope to export data with more significant figures and more samples (it is currently limited to 3 significant figures and 1200 samples), we could obtain that kind of measurement with a relatively low-cost setup (~300€).

What's next?

Before the workshop we already had some ideas about what to do next, but we decided to discuss them in a working group. This turned out to be a very good idea, because we got feedback from many different people. We can classify the applications that emerged during this discussion into four categories: image classification and segmentation, telemetry analysis, guidance and navigation, and finally, robotics.

Image classification and segmentation

Using AI for image classification and image segmentation is pretty common nowadays. Interestingly enough, one of the OSCW attendees, Tanuj Kumar, had a real need for it. He is part of a team that is developing a Cubesat with a hyperspectral camera at BITS Pilani (India), and one of the issues they have to deal with is the size of the images. We could use AI to compress the images, to analyse them so that only the parts we are interested in are downloaded, to discard invalid data such as cloudy images, or even to download only the results we need.

For this kind of application we would need dedicated hardware like the NVIDIA Jetson. Since this is not always an option, I suggest exploring the use of ARM Cortex-M series microcontrollers for Convolutional Neural Networks. This was initially part of our plans thanks to uTensor, but it turns out this framework doesn't support the convolution operation yet.
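To illustrate the "download only what we need" idea, here is a deliberately naive sketch that splits an image into tiles and keeps only the tiles that are not mostly covered by bright pixels. The brightness threshold is a crude stand-in for cloud detection, not AI; on board, a trained segmentation network would replace the `cloud_frac` estimate. The function name, the thresholds and the grayscale [0, 1] input format are all assumptions.

```python
from typing import List, Tuple

Image = List[List[float]]  # hypothetical format: grayscale values in [0, 1], row-major


def tiles_to_download(img: Image, tile: int, bright_thr: float = 0.8,
                      max_cloud_frac: float = 0.5) -> List[Tuple[int, int]]:
    """Return the (row, col) origin of every tile worth downlinking.

    A pixel at or above `bright_thr` is naively counted as cloud; tiles whose
    cloud fraction exceeds `max_cloud_frac` are discarded.
    """
    if tile < 1 or not img or not img[0]:
        raise ValueError("need a non-empty image and tile >= 1")
    rows, cols = len(img), len(img[0])
    keep = []
    for r0 in range(0, rows, tile):
        for c0 in range(0, cols, tile):
            # Edge tiles may be smaller than tile x tile.
            px = [img[r][c]
                  for r in range(r0, min(r0 + tile, rows))
                  for c in range(c0, min(c0 + tile, cols))]
            cloud_frac = sum(p >= bright_thr for p in px) / len(px)
            if cloud_frac <= max_cloud_frac:
                keep.append((r0, c0))
    return keep
```

Only the kept tiles (plus their coordinates) would then be queued for downlink, which is where the bandwidth saving would come from.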

Telemetry analysis

This is the field we are most interested in, since we already know there is a real need for it: my colleague Red is doing similar work at ESA.

What we propose is a system capable of analysing the complex time series that make up a satellite's telemetry. The results should tell us when something is going wrong, or even help us prevent future failures by predicting future values. To train a network, we would like to try using data extracted from the SatNOGS Network. If the amount of data is big enough, we could apply what we learn to other satellites in LEO. The goal would be to integrate this into the onboard software.
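Before training any network, a simple statistical baseline could help check whether the telemetry is usable. The sketch below flags samples of a single telemetry channel that deviate strongly from the mean of the preceding window (a rolling z-score). It is a swapped-in classical technique, not the AI system proposed above, and the window and threshold values are arbitrary assumptions.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def rolling_zscore_anomalies(values: Sequence[float], window: int = 20,
                             threshold: float = 3.0) -> List[int]:
    """Indices whose value lies more than `threshold` standard deviations
    away from the mean of the preceding `window` samples."""
    if window < 2:
        raise ValueError("window must be >= 2")
    anomalies = []
    for i in range(window, len(values)):
        past = values[i - window:i]
        mu, sigma = mean(past), pstdev(past)
        if sigma == 0:
            # Flat history: any change at all is suspicious.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

A learned model would aim to do better than this by capturing correlations between channels and predicting future values, but a baseline like this gives something to compare it against.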

Guidance and navigation

Artificial Intelligence is very useful in unknown environments and unpredictable scenarios. What would have happened to Philae, the lander that accompanied the Rosetta spacecraft and landed on comet 67P/Churyumov–Gerasimenko, if it had been able to use AI during its descent? Would the mission have been even more successful?

Robotics

This was the field we explored the least, but some people also proposed using intelligent robots to help humans during space travel and in future colonies.

I propose tackling the first two ideas because the other ones seem too far-fetched at the moment:

- Running CNNs on a Cortex-M: I have already got in touch with uTensor's developers. They think it should be feasible in the near future, and we've been invited to contribute to the project.

- Analysing LEO telemetry from the SatNOGS Network: we see two tasks that can be done in parallel. The first would be to retrieve the data and give it a proper structure, and the second would be to study which algorithms can be used to detect and predict failures. I also suggest aiming to run this on an ARM Cortex-M, because that would greatly increase the number of satellites able to use it.

If anyone is interested in helping us with any of these ideas, please contact me or Red and we will be happy to have a chat with you. We will be opening a thread on Libre Space Community to continue the discussion and coordinate the next steps.

Thanks to everyone who has shown interest, and special thanks to the organizers for making OSCW possible. See you next year!

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License